Artificial Intelligence

SpiraTest's artificial intelligence functionality empowers you to automate the creation of essential project artifacts from requirements, such as user stories, features, epics, and business/system requirements. It allows you to quickly generate a set of standard test cases and BDD Gherkin scenarios that can then be refined and improved as needed. The functionality uses Inflectra.ai, our world-class Generative AI platform that leverages the power of Amazon Bedrock and Amazon Nova.

AI Using Inflectra.ai

SpiraTest includes an embedded generative and agentic AI capability called Inflectra.ai. Inflectra.ai is a cloud-native feature that makes GenAI features seamless and easy to use, with a single-click setup to activate it within your instance of SpiraTest.

The Generative AI functionality in SpiraTest lets you:

- Create an entire product from start to finish by simply specifying a name and high-level product description.

- Take a requirement or user story and generate these common project artifacts:

    - Smaller features and user stories that decompose the requirement

    - BDD scenarios in Gherkin syntax

    - Test cases that the testing team can use to successfully test and validate the functionality being created

        - The test cases include detailed steps with expected behavior and relevant sample data

- Take an existing test case and perform the following actions:

    - Reverse-engineer the requirements from the existing test case (useful if the team doesn’t have existing formal requirements documentation)

    - Create detailed test steps if the test case doesn’t already have them

- Analyze requirements against common frameworks such as EARS, and provide recommendations on how to improve them

Artifact Generation Use Cases

For example, imagine that you have just created a new requirement or user story that consists of a single line such as “As a user I want to book a train between two European cities” or “As a user I want to book a flight between two cities”.

Normally, you would now need to manually write the various test cases that cover this requirement, including positive tests (the booking succeeds) and negative tests (the booking fails for various reasons). In addition, you would need to decompose this requirement into a set of lower-level development tasks for the developers to create the user interface, database, and other items that need to be in place to have a working booking page.

If using a BDD approach, you might also want to create a set of BDD Gherkin scenarios that describe each use case for the booking page more specifically. Finally, you would want to identify and document all potential risks associated with this new feature.

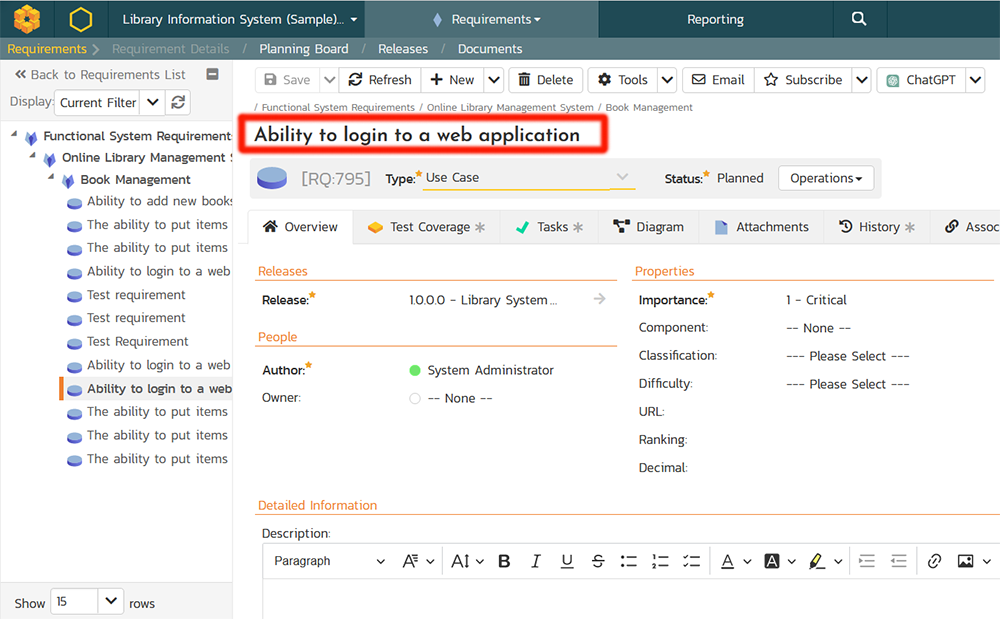

Using the power of Generative AI, you can radically streamline this approach using the new AI generation options available inside SpiraTest:

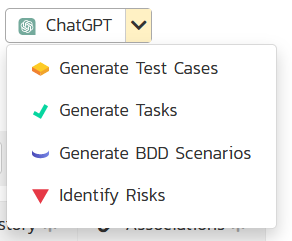

Generating Test Cases

When you click on the option to generate test cases, SpiraTest will use Inflectra.ai and create a set of test cases for the requirement in question. For our sample requirement, you can see that it has generated seven test cases, one for the positive case and six additional negative cases:

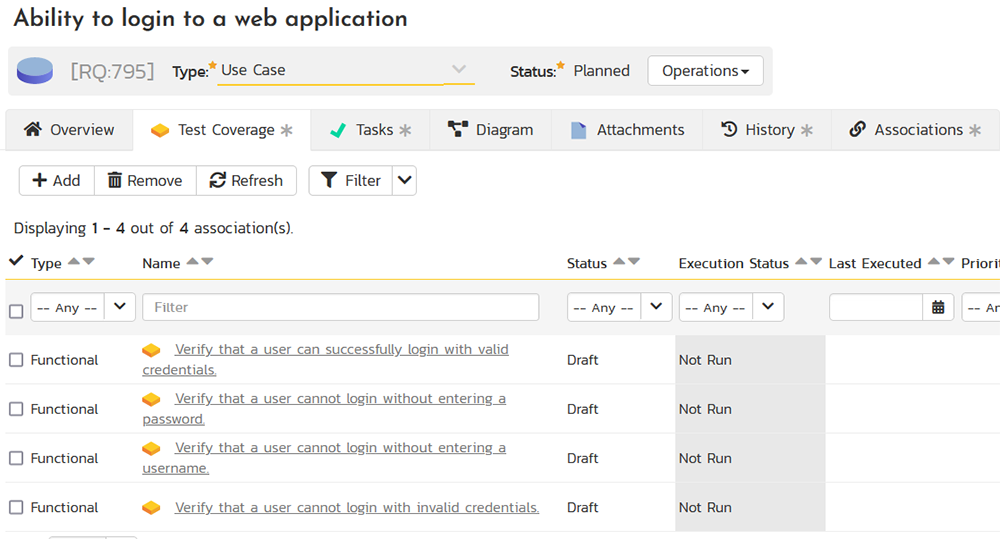

Each of the test cases consists of multiple detailed test steps that have a description of the action, the expected result if successful, and any sample data if relevant.

Note that the sample data will most likely be very notional, since the AI does not know which values are valid or invalid for your application. Still, the overall structure is correct and will save a lot of time otherwise spent manually writing and documenting the test cases.
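The structure described above (a test case made of steps, each with an action, an expected result, and optional sample data) can be pictured with a small data model. This is only an illustrative sketch: the class and field names `GeneratedTestCase`, `GeneratedTestStep`, and `steps_needing_data_review` are hypothetical and are not SpiraTest's schema or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GeneratedTestStep:
    """One step of a generated test case (hypothetical model)."""
    description: str                   # the action the tester performs
    expected_result: str               # what should happen if the step succeeds
    sample_data: Optional[str] = None  # notional data; verify before use


@dataclass
class GeneratedTestCase:
    """A generated test case and its ordered steps (hypothetical model)."""
    name: str
    is_positive: bool                  # True for the happy path, False for a negative case
    steps: List[GeneratedTestStep] = field(default_factory=list)

    def steps_needing_data_review(self) -> List[GeneratedTestStep]:
        """Return the steps whose sample data a tester should check."""
        return [s for s in self.steps if s.sample_data is not None]


case = GeneratedTestCase(
    name="Book a train between two European cities",
    is_positive=True,
    steps=[
        GeneratedTestStep("Open the booking page", "The booking form is displayed"),
        GeneratedTestStep(
            "Enter origin and destination",
            "Both cities are accepted",
            sample_data="Paris, Berlin",
        ),
    ],
)
print(len(case.steps_needing_data_review()))  # prints 1
```

Flagging the steps that carry sample data reflects the note above: the generated structure is usually reusable as-is, while the data values are the part most likely to need manual correction.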

In some cases, manually created test cases may be missing requirements. For example, the team imported some test cases from a spreadsheet or Microsoft Word document and now needs to reverse-engineer the requirements. The good news is that the AI assistant can help with this process as well.

When you click on the option to generate requirements, the system will create multiple requirements for the test case in question:

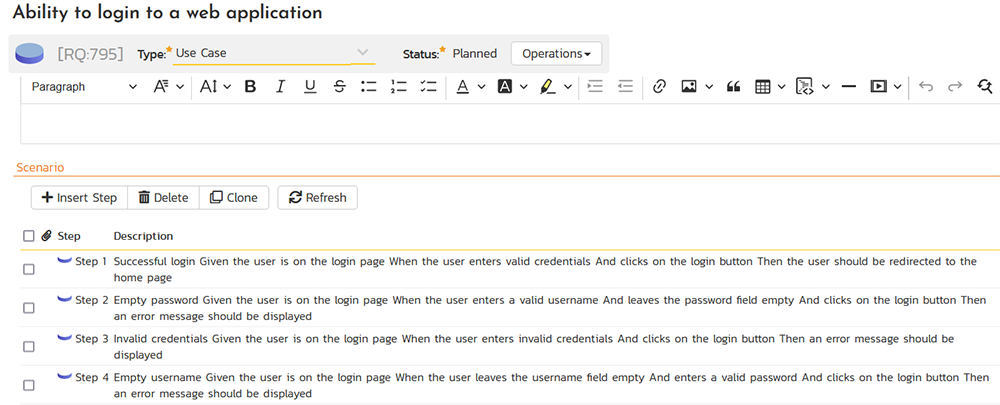

Generating BDD Scenarios

When using the Behavior-Driven Development (BDD) methodology, it is useful to decompose the requirement or user story into different BDD scenarios, both positive and negative. Using the AI Assistant, you can generate a set of BDD scenarios for this requirement, written in the Gherkin syntax. The AI Assistant will automatically convert them into an easy-to-read format, with bold for the Gherkin keywords and bullets separating out the components.

In this example, it has created four scenarios, one positive and three negative. Each is written in the Gherkin Given... When... Then format and is ready to use.
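To make the "bold keywords and bullets" presentation concrete, here is a minimal sketch of how plain Gherkin text could be rendered that way. It is not Inflectra.ai's implementation; the function name `format_gherkin`, the keyword list, and the sample scenario are assumptions made for illustration.

```python
import re

# Common Gherkin keywords handled by this sketch (not the full Gherkin grammar).
KEYWORDS = ("Scenario", "Given", "When", "Then", "And", "But")
_PATTERN = re.compile(r"^(%s)\b(:?)\s*(.*)$" % "|".join(KEYWORDS))


def format_gherkin(text: str) -> str:
    """Render Gherkin lines as Markdown: bold keywords, one bullet per step."""
    out = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines
        match = _PATTERN.match(line)
        if match is None:
            out.append(line)  # leave unrecognized lines unchanged
            continue
        keyword, colon, rest = match.groups()
        if keyword == "Scenario":
            out.append(f"**{keyword}{colon}** {rest}")
        else:
            out.append(f"- **{keyword}** {rest}")
    return "\n".join(out)


# Hypothetical scenario for the sample booking requirement.
sample = """
Scenario: Successful booking
  Given the user is on the booking page
  When the user selects two cities and confirms
  Then a booking confirmation is shown
"""
print(format_gherkin(sample))
```

The scenario title becomes a bold heading line and each Given/When/Then step becomes its own bullet, which mirrors the easy-to-read layout described above.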

Generating User Stories and Features from Epics

This feature lets you take an existing high-level requirement, and use Inflectra.ai to automatically generate multiple child requirements based on the name and description of the requirement.

This can be used recursively. For example, you can take a single Epic and generate multiple Features for it, then take the generated Features and generate User Stories for each of them.

Generating Compliance Requirements from Industry Standards

When working on software projects in regulated industries, you often need to make sure the system complies with a variety of industry standards, not just the functional requirements desired by the product owner. Inflectra.ai makes this very straightforward: you simply enter the name and description of the industry standard, and it will generate the detailed requirements that the system must meet to fulfill this standard.

With this feature, you can now ensure your products can meet all regulatory requirements as well as functional specifications without needing to manually input large amounts of compliance documentation.

Create Draft Products

In addition to creating these individual items from existing artifacts, the My Page also offers the option to create a whole SpiraTest product ‘from scratch’ using the Create new product wizard.

All you need to do is enter the name and high-level description of the product, and Inflectra.ai will create the initial product with top-level requirements in a single action.

From there you can use the provided options to build out additional levels of product backlog, and/or create the test cases.

Analysis Use Cases

Analyzing Requirements for Quality

From the requirement details page, you can analyze the requirement to see how well it is written and organized, based on specific frameworks. This provides valuable insights into the quality of the text and how effectively it communicates its meaning to others. Currently we only support EARS, but we plan on supporting additional frameworks as well as "plain English".

The analysis includes a score from 1 to 5. A score of 5 means the requirement is very well written and does not need to be improved, while a score of 1 means lots of work is needed. Along with a score, detailed notes and guidance are provided about how to improve the requirement and why, as well as what is in good shape already.
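For readers unfamiliar with EARS (Easy Approach to Requirements Syntax), the framework defines a small set of sentence templates, such as "When <trigger>, the <system> shall <response>". The sketch below only checks which basic EARS template a sentence resembles. It does not compute the 1 to 5 score and is not how Inflectra.ai performs its analysis; the function name `classify_ears` and the regular expressions are assumptions made for illustration.

```python
import re
from typing import Optional

# The five basic EARS templates, checked in order (keyword-led patterns first).
EARS_PATTERNS = [
    ("event-driven", re.compile(r"^when\b.+,\s*the\b.+\bshall\b", re.I)),
    ("state-driven", re.compile(r"^while\b.+,\s*the\b.+\bshall\b", re.I)),
    ("optional-feature", re.compile(r"^where\b.+,\s*the\b.+\bshall\b", re.I)),
    ("unwanted-behavior", re.compile(r"^if\b.+,\s*then\s+the\b.+\bshall\b", re.I)),
    ("ubiquitous", re.compile(r"^the\b.+\bshall\b", re.I)),
]


def classify_ears(requirement: str) -> Optional[str]:
    """Return the name of the EARS template the text resembles, or None."""
    text = requirement.strip()
    for name, pattern in EARS_PATTERNS:
        if pattern.search(text):
            return name
    return None


print(classify_ears(
    "When the user confirms a booking, the system shall send a confirmation email."
))
print(classify_ears("As a user I want to book a train between two European cities"))
```

A user story in the "As a user I want..." form matches none of the templates, which is the kind of gap a framework-based analysis would point out and suggest rewording.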

Furthermore, Inflectra.ai will give you the option to automatically rewrite the requirement to improve it for you.

If you choose the option to improve a requirement, the built-in detailed change and audit tracking will ensure you understand exactly what changes were made by the AI:

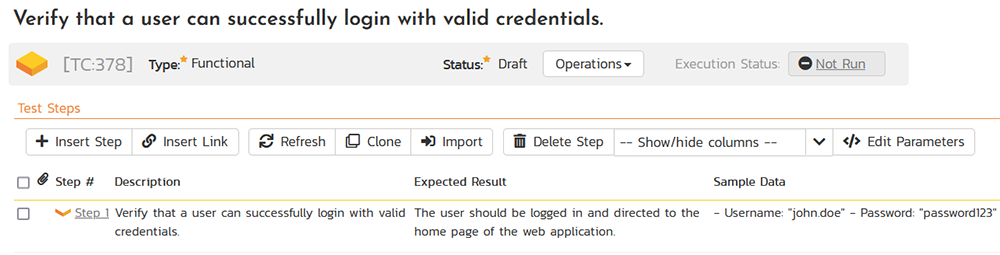

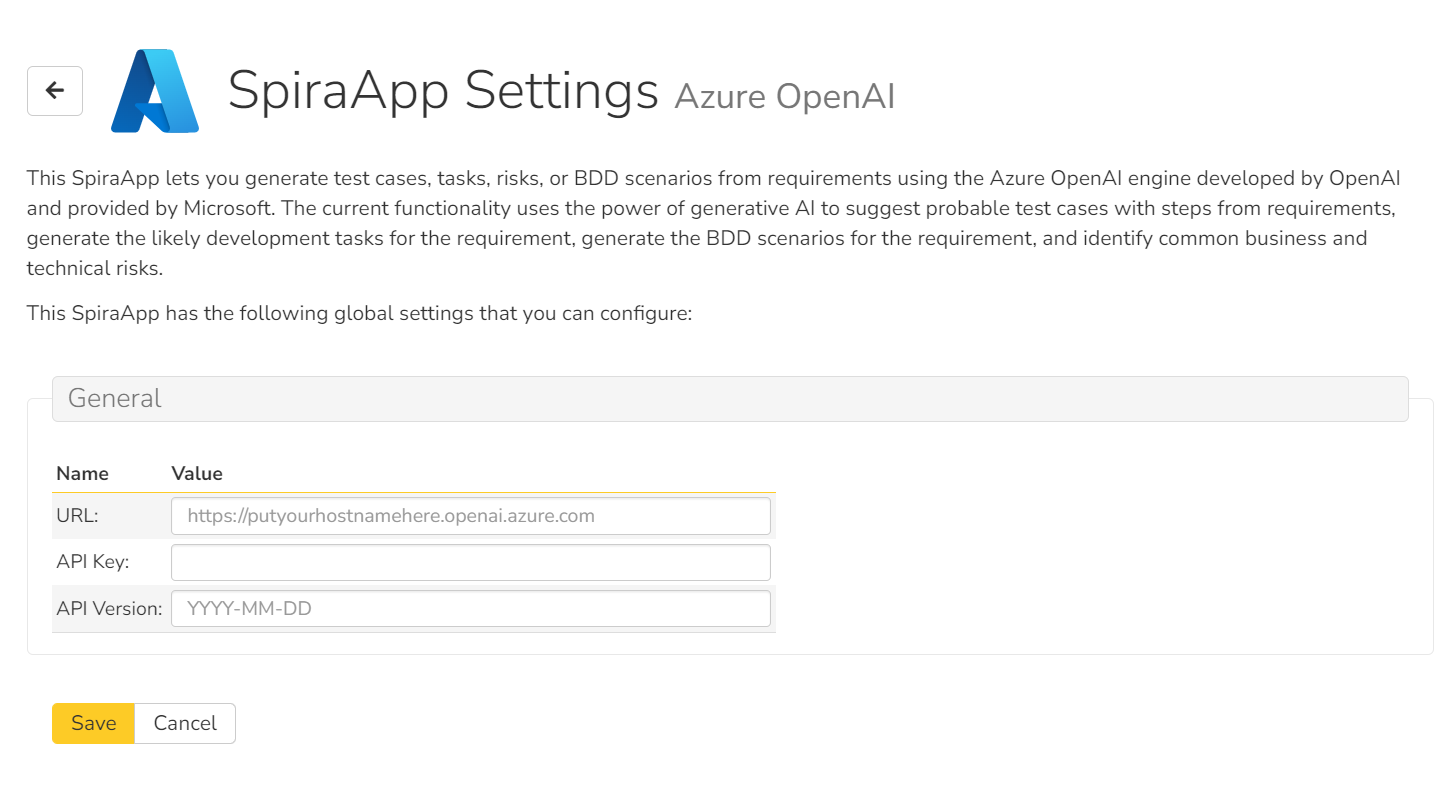

AI Functionality for On-Premise Customers

For customers that host SpiraTest on-premise, or that would simply prefer to use their own managed LLM with SpiraTest, Inflectra also provides several “Bring Your Own LLM” options, including Azure OpenAI, Amazon Bedrock, and OpenAI.

These options make use of the SpiraApps platform, which lets customers deploy plugins into their instance of SpiraTest. This ensures that you keep control over your sensitive data, while at the same time benefiting from the productivity improvements of Generative AI.
